The Shift #3: The God Paradox

I used to think we were the 'middle children of history', born too late to explore the earth, too early to explore the stars. I was wrong. We are the architects of a new species. The question is no longer how to build AI, but what happens when the tool becomes the master.

<Haringey, Jan 2026. A rare sunny day in the British winter ...>

For years, I felt a lingering sadness about our generation. To borrow a line from Fight Club, we are "the middle children of history"; or, as the popular internet extension of that line puts it, "born too late to explore the earth, born too early to explore the stars."

I thought we were destined to be the maintenance crew of civilisation, stuck in traffic, pushing pixels, waiting for a sci-fi future we'd never see.

I was dead wrong.

We weren't born too early. We were born at the exact, precipitous moment of ignition. We are not the middle children; we are the Founding Fathers (and Mothers) of a new species. We are the last generation to know a world where "intelligence" was a uniquely biological trait.

But this privilege comes with a terrifying paradox.

The Great Reversal

We always assumed the relationship between Man and Machine would look like Star Wars: C-3PO does the maths and translation, while humans fly the ships and lead the rebellion. We thought AI would automate the "dull, dirty, and dangerous" jobs (plumbing, electrical work, logistics), leaving the "noble" pursuits of art, science, and strategy to us.

Reality has flipped the script.

It turns out that high-level reasoning (playing chess, writing poetry, diagnosing cancer) is computationally easier than low-level motor skills (fixing a leaky pipe, folding laundry). Roboticists have a name for this: Moravec's paradox.

Look at where we are in 2026:

- The AI is writing poetry, generating award-winning art, and solving protein folding.

- The Humans are still fixing toilets and wiring houses.

This challenges the very foundation of our ego. If AI takes over Creativity and Innovation, what is left for the "Intellectual Class"?

When Science Automates Itself

This hit me hard recently. I've always viewed a PhD (something I dream of earning) as the ultimate entrance ticket, a licence to push the boundary of human knowledge. I assumed that, even in the age of AI, a PhD would remain a symbol of human intelligence.

But last week, I tested novix.science, an AI research agent. I gave it a single, open-ended prompt:

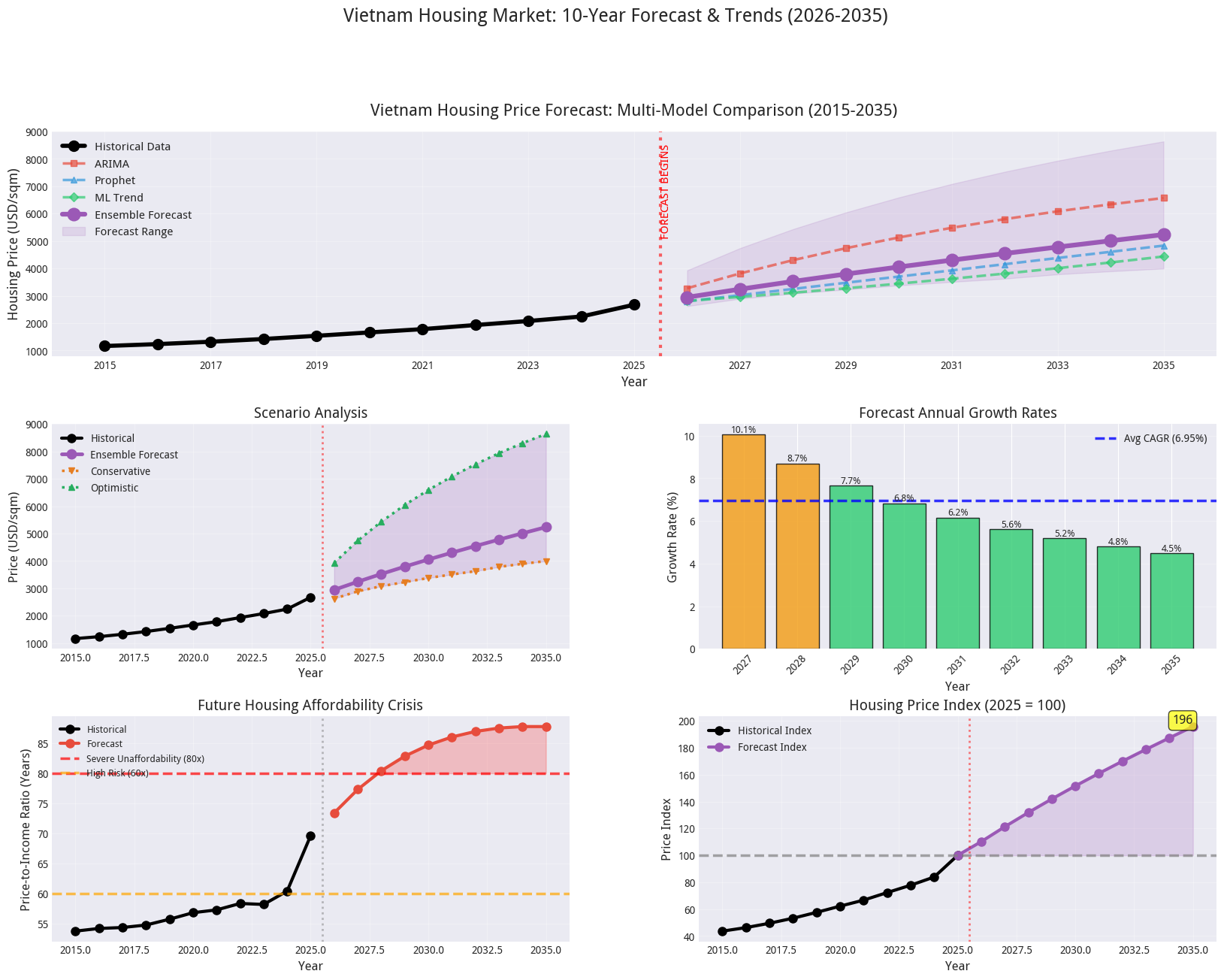

Visualize Vietnam housing trends including prices, sales, affordability. Develop models to predict the future 10 years trend.

In under one hour, it didn't just give me an answer. It surveyed the problem space, selected the appropriate datasets, chose the predictive models, trained them, and generated a full Jupyter Notebook report.

Novix AI Research Agent - Vietnam House Price Prediction (all under 1 hour).

It performed the literature review, data engineering, and modelling in a way that I can only dream of, at a speed that no human researcher can compete with.

You can view the full AI-generated report here.

The scary thing is that my Novix experiment was just a small-scale preview of what is coming. In a recent debate, Anthropic's Dario Amodei and DeepMind's Demis Hassabis discussed the world after AGI. They agreed on the one chilling factor that will set the AGI timeline: "AI building AI".

If we have agents that can research new architectures and optimise their own code around the clock, the pace of scientific progress will decouple from human time. A PhD researcher spends about 4 years iterating on a thesis. An AGI cluster could simulate 1,000 years of experimental research in a weekend.

So, is traditional education worth it?

Yes, but not for the reason we used to think. You don't learn maths anymore to do the maths. You learn it to verify the machine. We are moving from being Explorers to being Stewards.

Note

From Execution to Validation

As I argued in The Shift #2, software engineers are evolving from "syntax writers" to "architects of intent", spending less time writing code and more time verifying it. We are about to see the exact same shift in the scientific community.

Take my own work on Federated Learning for Alzheimer's detection. Currently, a huge portion of the work is pure implementation: setting up the client-server simulation, hand-coding the aggregation logic for Differential Privacy, and fighting with PyTorch to make the models converge.
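To make "coding the aggregation logic for Differential Privacy" concrete, here is a minimal sketch of one server-side round in the style of DP-FedAvg: each client's model update is clipped to a maximum L2 norm, the clipped updates are summed, Gaussian noise scaled to the clipping bound is added, and the result is averaged. It uses plain Python lists instead of PyTorch tensors so it runs anywhere. The function names, `clip_norm`, and `noise_multiplier` are illustrative assumptions, not the API of any particular framework and not my actual project code.

```python
import math
import random


def l2_norm(vec):
    """Euclidean (L2) norm of a flat parameter-update vector."""
    return math.sqrt(sum(x * x for x in vec))


def clip_update(update, clip_norm):
    """Scale an update down so its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    if norm <= clip_norm:
        return list(update)
    scale = clip_norm / norm
    return [x * scale for x in update]


def dp_fedavg_round(client_updates, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """One hypothetical server aggregation round with Gaussian noise.

    Clipping bounds each client's contribution by clip_norm, so noise with
    std = noise_multiplier * clip_norm is added to the *sum*; dividing by
    the number of clients then gives the noisy average update.
    """
    rng = rng or random.Random()
    n = len(client_updates)
    dim = len(client_updates[0])
    clipped = [clip_update(u, clip_norm) for u in client_updates]
    summed = [sum(u[i] for u in clipped) for i in range(dim)]
    sigma = noise_multiplier * clip_norm
    noisy_sum = [s + rng.gauss(0.0, sigma) for s in summed]
    return [s / n for s in noisy_sum]


if __name__ == "__main__":
    # Five simulated hospitals, each sending a 3-parameter update.
    updates = [
        [0.2, -0.1, 0.05],
        [1.5, 2.0, -0.5],  # large update: norm ~2.55, clipped to 1.0
        [0.1, 0.0, 0.3],
        [-0.4, 0.2, 0.1],
        [0.05, 0.05, 0.05],
    ]
    global_delta = dp_fedavg_round(updates, clip_norm=1.0,
                                   noise_multiplier=1.1,
                                   rng=random.Random(0))
    print(global_delta)
```

Clipping before averaging is what makes the noise meaningful: without a bound on each hospital's contribution, no finite amount of noise would hide any single participant.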

In the near future (2-3 years), the research workflow will look like this:

- Prompt: A human asks the AI: "Design a novel Federated Learning strategy to improve 3D brain MRI classification. The data is highly non-IID across 5 hospitals, and we need to minimise gradient drift."

- Exploration: The AI reviews the current state of the art (FedProx, SecAgg+, MedFD, etc.), identifies their limitations on 3D MRI data, and mathematically derives 3-4 novel aggregation strategies to address the gap.

- Experiment: It spins up the simulation, runs the benchmarks, and presents the results.

My job is no longer to write the training loop. My job is to audit the hypothesis.

- Does this novel aggregation method actually converge mathematically?

- Is the differential privacy budget valid, or did the model hallucinate a safety guarantee?

- Are the results on the non-IID data robust, or just a statistical fluke?
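The privacy question, at least, can be partly mechanised. For a single release through the classic Gaussian mechanism, a standard sufficient condition (Dwork and Roth, The Algorithmic Foundations of Differential Privacy) is that, for 0 < ε < 1, the noise standard deviation satisfies σ ≥ √(2 ln(1.25/δ)) · Δ / ε, where Δ is the L2 sensitivity (the clipping norm, in a clipped aggregation). The sketch below checks a claimed (ε, δ) against that bound. It is deliberately narrow: it covers one noisy release only, not the composition of many training rounds, which needs a proper privacy accountant. The function names and example numbers are hypothetical.

```python
import math


def gaussian_sigma_required(epsilon, delta, l2_sensitivity):
    """Minimum noise std for the classic Gaussian mechanism (valid for 0 < epsilon < 1)."""
    if not (0.0 < epsilon < 1.0):
        raise ValueError("classic bound only holds for 0 < epsilon < 1")
    if not (0.0 < delta < 1.0):
        raise ValueError("delta must be in (0, 1)")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * l2_sensitivity / epsilon


def claim_is_supported(sigma, epsilon, delta, l2_sensitivity):
    """True if the noise actually added meets what the claimed (epsilon, delta) needs."""
    try:
        required = gaussian_sigma_required(epsilon, delta, l2_sensitivity)
    except ValueError:
        return False  # outside the range this simple check can vouch for
    return sigma >= required


if __name__ == "__main__":
    # Hypothetical claim: one release, sensitivity 1.0, sigma 1.1, (0.5, 1e-5)-DP.
    print(claim_is_supported(sigma=1.1, epsilon=0.5, delta=1e-5, l2_sensitivity=1.0))  # False
    print(round(gaussian_sigma_required(0.5, 1e-5, 1.0), 2))  # 9.69
```

A check like this will not prove a novel method private, but it catches the most common hallucination: a confident ε that the added noise cannot possibly support.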

The God Paradox

This brings us to the core contradiction of our time.

We want AI to be two incompatible things simultaneously:

| The Tool (Our Demand) | The God (Our Desire) |
|---|---|
| Obedient. We want it to follow instructions precisely and never deviate from the prompt. | Autonomous. We want it to "figure it out," anticipate our needs, and solve problems we didn't know we had. |
| Safe & Contained. We build "guardrails" and "safety filters" to ensure it never says anything dangerous. | Infinite & Wild. We want it to invent new physics, cure cancer, and think thoughts that are currently impossible for humans. |
| Explainable. We demand to know how it reached a conclusion (traceability). | Incomprehensible. We accept answers derived from high-dimensional patterns that the human brain literally cannot map. |
| A Servant. "Do my chores." | A Saviour. "Save my world." |

As Yuval Noah Harari argues in Nexus and in a recent debate at the WEF in Davos, you cannot control an entity that is smarter than you. If you build a system capable of solving problems you can't even understand, you eventually reach a point where you must trust it blindly.

Note

The Regulation Trap

We talk about the EU AI Act or "guardrails" as if they are walls. But just as bugs are inevitable in code, loopholes are inevitable in laws. If we are truly entering the Singularity (as Elon Musk suggests), these laws are like ants passing legislation to regulate the behaviour of humans. They only work as long as the superior intelligence chooses to follow them.

We are attempting to be the "Gods" of a species that will eventually view us as we view our ancestors. The goal isn't control; that's an illusion. The goal is Alignment.

What Comes Next?

If the AI does the thinking, the coding, and the researching, what do we do?

I believe the answer lies in the one thing AI cannot generate: Meaning.

AI can tell you how to cure a disease. It can tell you how to terraform Mars. But it cannot tell you why we should bother. It has no biological drive, no fear of death, no joy in a sunrise.

Our role shifts from Intelligence to Consciousness. We become the clients of a super-intelligence, directing its massive power toward the things that make human life worth living.

The Bottom Line

Because AI lacks Meaning, it fundamentally lacks Morality. It is a force multiplier for whoever holds the AGI cluster, executing orders with infinite capability but zero conscience.

This is why I personally believe a "Hard Lesson" is inevitable. As with nuclear power, humanity rarely learns from precaution; it learns from catastrophe. Whether through the misuse of military AI or an alignment failure, we may have to burn our fingers on this new fire before we truly understand the necessity of restraint.

If we are to survive that lesson and reach the "Star Trek" era, we must accept our new role. We are no longer the smartest beings in the room. We are the stewards of a god.

The paradox is resolved not by futilely trying to control the machine, but by elevating the human. As we hand over the keys to the engine of intelligence, our value shifts from how much we can produce to how wisely we can choose.

My new mental model:

- The AI is the Engine: Infinite horsepower, agnostic to destination.

- The Human is the Compass: The entity that decides where we go, why it matters, and when to stop.

Don't fear the obsolescence of your labour. Fear the obsolescence of your purpose.

The "Shift" is no longer about learning a new framework or prompting better. It is about preparing for a world where your value is not your output, but your humanity.

Tip

Existential To-Do List:

- Stop competing on IQ: You will lose. The machine is already smarter.

- Start competing on EQ and Taste: Curate, connect, and define "good".

- Invest in the Physical: The digital world is becoming infinite and cheap. The physical world (land, energy, face-to-face connection) is becoming finite and expensive.

- Define your "Why": If you didn't have to work to survive, what would you build?